The AI that is hogging so much of the world’s attention now—and sucking up huge amounts of computing power and electricity—is based on a technique called deep learning. In deep learning, linear algebra (specifically, matrix multiplications) and statistics are used to extract, and thus learn, patterns from large datasets during the training process. Large language models (LLMs) like Google’s Gemini or OpenAI’s GPT have been trained on troves of text, images and video and have developed many abilities, including “emergent” ones they were not explicitly trained for (with promising implications, but also worrying ones). More specialised, domain-specific versions of such models now exist for images, music, robotics, genomics, medicine, climate, weather, software coding and more.
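To make the idea of “learning patterns with matrix multiplications and statistics” concrete, here is a deliberately tiny sketch, assuming a one-layer linear model and made-up, noise-free data that follow y = 2x + 1. It is not how production LLMs are trained: real networks stack many layers with nonlinearities and have billions of parameters. The helper names `matmul` and `train` are hypothetical. Predictions come from a matrix multiplication, a statistical measure of error (mean squared error) says how wrong they are, and gradient descent nudges the weights until the pattern is learned.

```python
def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m), both given as lists of lists."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def train(xs, ys, lr=0.05, epochs=2000):
    """Fit y ~ slope * x + intercept by gradient descent on mean squared error.

    Toy illustration only (hypothetical helper): one linear layer, no nonlinearity.
    """
    # Design matrix: each row is [x, 1], so the weight column is [slope, intercept].
    X = [[x, 1.0] for x in xs]
    w = [[0.0], [0.0]]  # 2 x 1 weight matrix, initialised to zero
    n = len(xs)
    for _ in range(epochs):
        preds = matmul(X, w)  # n x 1 matrix of predictions
        errors = [preds[i][0] - ys[i] for i in range(n)]
        # Gradient of the mean squared error: (2/n) * X^T (Xw - y)
        grad = [sum(2.0 / n * X[i][j] * errors[i] for i in range(n)) for j in range(2)]
        # Step each weight a little way against its gradient
        w = [[w[j][0] - lr * grad[j]] for j in range(2)]
    return w[0][0], w[1][0]


if __name__ == "__main__":
    slope, intercept = train([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])
    print(round(slope, 3), round(intercept, 3))  # 2.0 1.0
```

The model is never told the rule y = 2x + 1; it recovers the slope and intercept purely from the examples, which is the sense in which training “extracts” a pattern from data.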

Beyond human comprehension

Rapid progress in the field has led to predictions that AI is “taking over drug development”, that it will “transform every aspect of Hollywood storytelling”, and that it might “transform science itself” (all claims made in this newspaper within the past year). It is said that AI will speed up scientific discovery, automate away the tedium of white-collar jobs and lead to wondrous innovations not yet imaginable. AI is expected to improve efficiency and drive economic growth. It might also displace jobs, endanger privacy and security, and lead to ethical conundrums. It has already outrun human understanding of what it is doing.

Researchers are still getting a handle on what AI will and will not be able to do. So far, bigger models, trained on more data, have proved more capable. This has encouraged a belief that continuing to scale up both will keep making AI better. Researchers have studied “scaling laws”, which describe how model size and the volume of training data interact to improve LLMs. But what is a “better” LLM? Is it one that correctly answers questions, or one that comes up with creative ideas?

It is also tricky to predict how well existing systems and processes will be able to make use of AI. So far, the power of AI is most apparent in discrete tasks. Give it images of a rioting mob, and an AI model, trained for this specific purpose, can identify faces in the crowd for the authorities. Give an LLM a law exam, and it will do better than your average high-schooler. But performance on open-ended tasks is harder to evaluate.

The big AI models of the moment are very good at generating things, from poetry to photorealistic images, based on patterns represented in their training data. But such models are less good at deciding which of the things they have generated make the most sense or are the most appropriate in a given situation. They are less good at logic and reasoning. It is unclear whether more data will unlock the capability to reason consistently, or whether entirely different sorts of models will be needed. It is possible that for a long time the limits of AI will be such that the reasoning of humans will be required to harness its power.

Working out what these limits are will matter in areas like health care. Used properly, AI can catch cancer earlier, expand access to services, improve diagnosis and personalise treatment. AI algorithms can outperform human clinicians at such tasks, according to a meta-analysis published in April in npj Digital Medicine. But their training can lead them astray in ways that suggest the value of human intervention.

For example, AI models are prone to exacerbating human bias because of “data distribution shifts”: a diagnostic model may make mistakes if it is trained mostly on images of white people’s skin and then given an image of a black person’s skin. Combining AI with a qualified human proved most effective. The paper showed that clinicians using AI increased the share of people they correctly diagnosed with cancer from 81.1% to 86.1%, while also increasing the share of people correctly told they were cancer-free. Because AI models tend to make different mistakes from humans, AI-human partnerships have been seen to outperform both AI and humans alone.

The robotic method

Humans might be less necessary for exploring new hypotheses in science. In 2009 Ross King, now at the University of Cambridge, said that his ultimate goal was to design a system that would function as an autonomous lab, or “robot scientist”. Dr King’s AI scientist, called Adam, was engineered to come up with hypotheses, use its robotic arm to perform experiments, collect results with its sensors and analyse them. Unlike graduate students and postdocs, Adam never needs to take a break to eat or sleep. But AI systems of this type are (for now) restricted to relatively narrow domains such as drug discovery and materials science. It remains unclear whether they will deliver much more than incremental gains over human-led research.

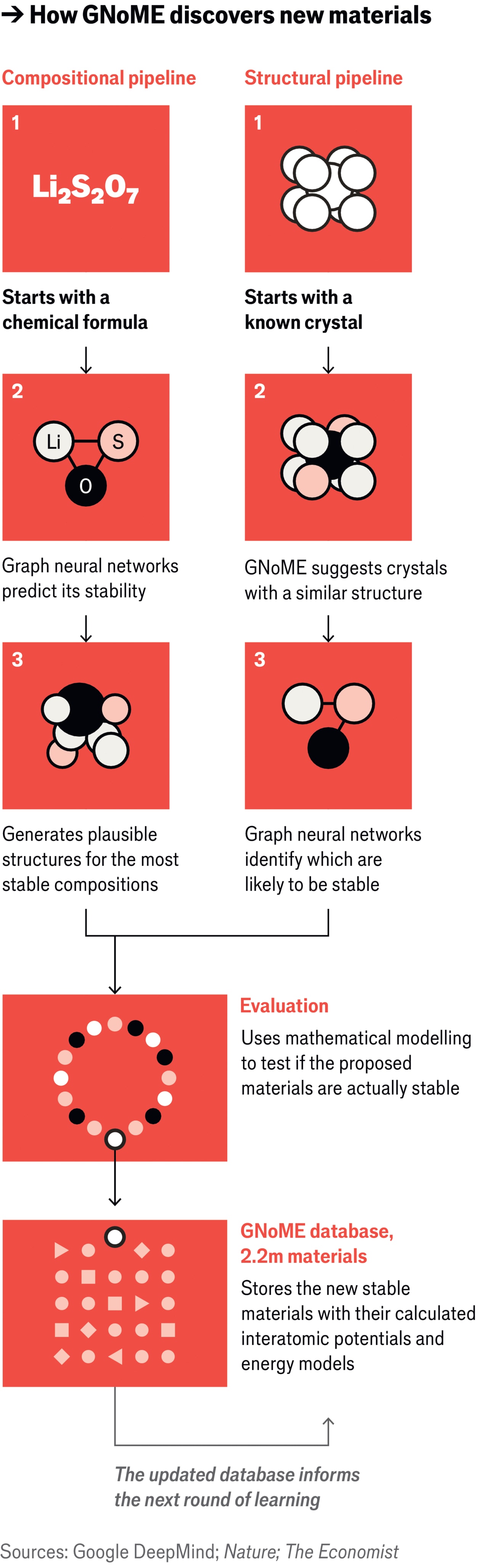

AI techniques have been used in science for decades, to classify, sift and analyse data, and to make predictions. For example, researchers at Project CETI collected a large dataset of whale vocalisations, then trained an AI model on this data to work out which sounds might have meaning. Or consider AlphaFold, a deep neural network developed by Google DeepMind. Trained on a massive protein database, it can quickly and accurately predict the three-dimensional shapes of proteins, a task that once required days of careful experimentation and measurement by humans. GNoME, another AI system developed by DeepMind, is intended to assist in the discovery of new materials with specific chemical properties (see diagram).

AI can also help make sense of large flows of data that would otherwise be overwhelming for researchers, whether that involves sifting through results from a particle collider to identify new subatomic particles, or keeping up with scientific literature. It is quite impossible for any human, no matter how fastidious a reader, to digest every scientific paper that might be relevant to their work. So-called literature-based discovery systems can analyse these mountains of text to find gaps in research, to combine old ideas in novel ways or even to suggest new hypotheses. It is difficult to determine, though, whether this type of AI work will prove beneficial. AI may not be any better than humans at making unexpected deductive leaps; it may instead simply favour conventional, well-trodden paths of research that lead nowhere exciting.

In education there are concerns that AI—and in particular bots like ChatGPT—might actually be an impediment to original thinking. According to a study done in 2023 by Chegg, an education company, 40% of students around the world used AI to do their schoolwork, mostly for writing. This has led some teachers, professors and school districts to ban AI chatbots. Many fear that relying on them will spare students the struggle of solving a problem or making an argument, which is how problem-solving and critical-thinking skills develop. Other teachers have taken an altogether different tack, embracing AI as a tool and incorporating it into assignments. For example, students might be asked to use ChatGPT to write an essay on a topic and then critique what it gets wrong.

Wait, did a chatbot write this story?

As well as producing text at the click of a button, today’s generative AI can produce images, audio and videos in a matter of seconds. This has the potential to shake things up in the media business, in fields from podcasting to video games to advertising. AI-powered tools can simplify editing, save time and lower barriers to entry. But AI-generated content may put some artists, such as illustrators or voice actors, at risk. In time, it may be possible to make entire films using AI-driven simulacra of human actors—or entirely artificial ones.

Still, AI models can neither create nor solve problems on their own (or not yet anyway). They are merely elaborate pieces of software, not sentient or autonomous. They rely on human users to invoke them and prompt them, and then to apply or discard the results. AI’s revolutionary capacity, for better or worse, still depends on humans and human judgment.

© 2024, The Economist Newspaper Limited. All rights reserved. From The Economist, published under licence. The original content can be found on www.economist.com