All these things are powered by artificial-intelligence (AI) models. Most rely on a neural network, trained on massive amounts of information—text, images and the like—relevant to how it will be used. Through much trial and error the weights of connections between simulated neurons are tuned on the basis of these data, akin to adjusting billions of dials until the output for a given input is satisfactory.

There are many ways to connect and layer neurons into a network. A series of advances in these architectures has helped researchers build neural networks which can learn more efficiently and which can extract more useful findings from existing datasets, driving much of the recent progress in AI.

Most of the current excitement has been focused on two families of models: large language models (LLMs) for text, and diffusion models for images. These are deeper (ie, have more layers of neurons) than what came before, and are organised in ways that let them churn quickly through reams of data.

LLMs such as GPT, Gemini, Claude and Llama are all built on the so-called transformer architecture, introduced in 2017 by Ashish Vaswani and his team at Google Brain. The key principle of transformers is that of “attention”. An attention layer allows a model to learn how multiple aspects of an input (such as words at certain distances from each other in text) are related, and to take that into account as it formulates its output. Many attention layers in a row allow a model to learn associations at different levels of granularity: between words, phrases or even paragraphs. This approach is also well suited to implementation on graphics-processing unit (GPU) chips, which has allowed these models to scale up and has, in turn, ramped up the market capitalisation of Nvidia, the world’s leading GPU-maker.
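For readers who want to see the arithmetic, here is a minimal sketch of a single attention layer in Python with NumPy. It assumes the common "scaled dot-product" form of attention; the sizes, the random weights and the function names (`softmax`, `self_attention`) are illustrative choices, not taken from any particular model.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum before exponentiating, for numerical stability.
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a sequence x."""
    q = x @ w_q  # queries: what each position is looking for
    k = x @ w_k  # keys: what each position offers
    v = x @ w_v  # values: the content that gets mixed together
    # Score every position against every other position.
    scores = q @ k.T / np.sqrt(k.shape[-1])
    # Each row becomes a set of non-negative weights that sum to 1.
    weights = softmax(scores, axis=-1)
    return weights @ v, weights

rng = np.random.default_rng(0)
seq_len, d_model, d_head = 5, 8, 4  # illustrative sizes (5 "words")
x = rng.normal(size=(seq_len, d_model))  # stand-in for word vectors
w_q = rng.normal(size=(d_model, d_head))  # random here; learned in practice
w_k = rng.normal(size=(d_model, d_head))
w_v = rng.normal(size=(d_model, d_head))

output, weights = self_attention(x, w_q, w_k, w_v)
print(output.shape)   # (5, 4)
print(weights.shape)  # (5, 5): one weight for each pair of positions
```

Every step is a matrix operation, which is why this sort of layer maps so readily onto GPUs.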

Transformer-based models can generate images as well as text. The first version of DALL-E, released by OpenAI in 2021, was a transformer that learned associations between groups of pixels in an image, rather than words in a text. In both cases the neural network is translating what it “sees” into numbers and performing maths (specifically, matrix operations) on them. But transformers have their limitations. They struggle to learn consistent world-models. For example, when fielding a human’s queries they will contradict themselves from one answer to the next, without any “understanding” that the first answer makes the second nonsensical (or vice versa), because they do not really “know” either answer—just associations of certain strings of words that look like answers.

And as many now know, transformer-based models are prone to so-called “hallucinations” where they make up plausible-looking but wrong answers, and citations to support them. Similarly, the images produced by early transformer-based models often broke the rules of physics and were implausible in other ways (which may be a feature for some users, but was a bug for designers who sought to produce photo-realistic images). A different sort of model was needed.

Not my cup of tea

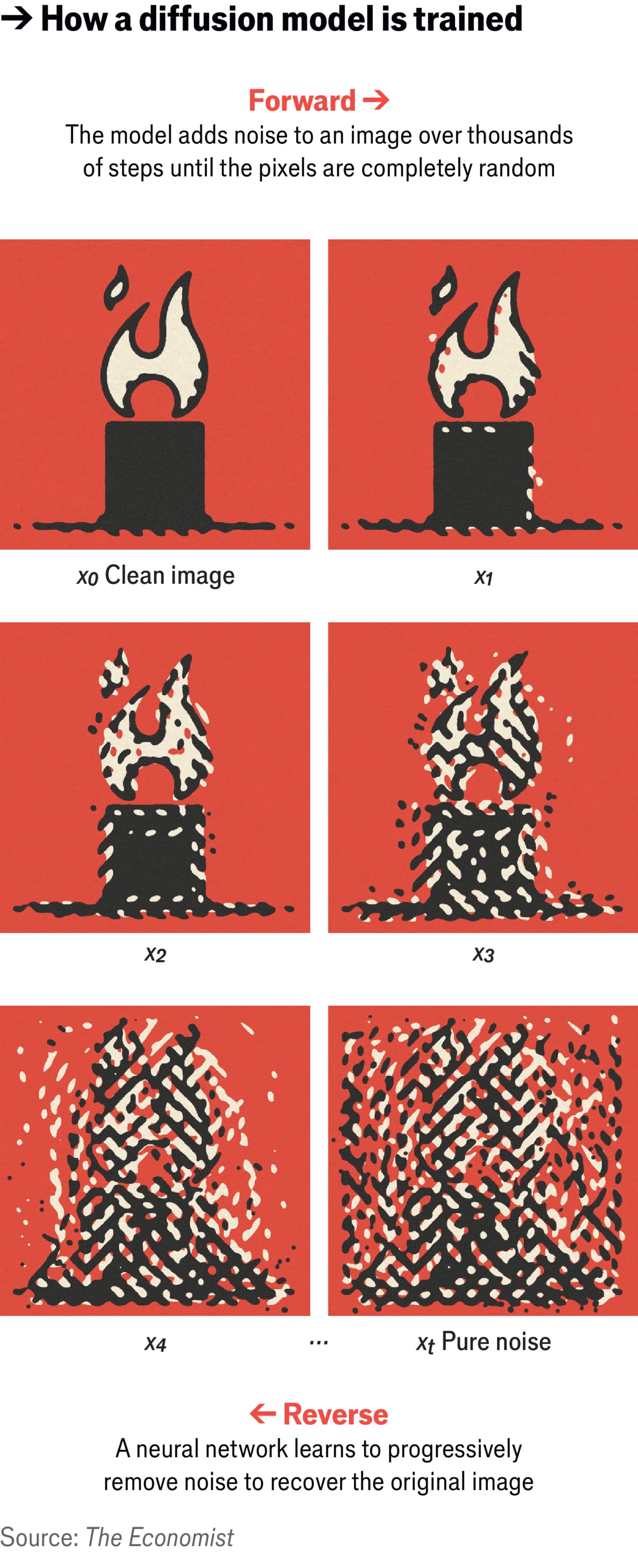

Enter diffusion models, which are capable of generating far more realistic images. The main idea for them was inspired by the physical process of diffusion. If you put a tea bag into a cup of hot water, the tea leaves start to steep and the colour of the tea seeps out, blurring into clear water. Leave it for a few minutes and the liquid in the cup will be a uniform colour. The laws of physics dictate this process of diffusion. Much as you can use the laws of physics to predict how the tea will diffuse, you can also reverse-engineer this process, to reconstruct where and how the tea bag might first have been dunked. In real life the second law of thermodynamics makes this a one-way street; one cannot get the original tea bag back from the cup. But learning to simulate that entropy-reversing return trip makes realistic image-generation possible.

Training works like this. You take an image and apply progressively more blur and noise, until it looks completely random. Then comes the hard part: reversing this process to recreate the original image, like recovering the tea bag from the tea. This is done using “self-supervised learning”, similar to how LLMs are trained on text: covering up words in a sentence and learning to predict the missing words through trial and error. In the case of images, the network learns how to remove increasing amounts of noise to reproduce the original image. As it works through billions of images, learning the patterns needed to remove distortions, the network gains the ability to create entirely new images out of nothing more than random noise.
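The noising half of that recipe can be sketched in a few lines of Python with NumPy. This is an illustration under stated assumptions: it uses Gaussian noise only (no blur), a linear noise schedule of the kind popularised by early diffusion papers, and a tiny random array standing in for a real image. The de-noising network itself is omitted; `denoising_loss` shows only the quantity such a network is commonly trained to reduce. All function names and parameter values are hypothetical.

```python
import numpy as np

def noise_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    # betas: how much noise is mixed in at each step (assumed linear schedule).
    betas = np.linspace(beta_start, beta_end, num_steps)
    # alpha_bar[t]: fraction of the original signal's variance left at step t.
    return np.cumprod(1.0 - betas)

def add_noise(image, t, alpha_bar, rng):
    # Jump straight to step t: shrink the image and add Gaussian noise.
    noise = rng.normal(size=image.shape)
    noisy = np.sqrt(alpha_bar[t]) * image + np.sqrt(1.0 - alpha_bar[t]) * noise
    return noisy, noise

def denoising_loss(predicted_noise, true_noise):
    # Mean squared error between the network's guess and the noise actually added.
    return float(np.mean((predicted_noise - true_noise) ** 2))

rng = np.random.default_rng(0)
image = rng.uniform(-1.0, 1.0, size=(8, 8))  # stand-in for a real image
alpha_bar = noise_schedule(num_steps=1000)

slightly_noisy, _ = add_noise(image, 10, alpha_bar, rng)
almost_random, true_noise = add_noise(image, 999, alpha_bar, rng)

print(alpha_bar[10])   # close to 1: the image is still mostly intact
print(alpha_bar[999])  # close to 0: almost nothing of the image remains
```

During training a network would see the noisy image and the step number, guess the noise, and have its weights nudged to shrink `denoising_loss`. Generating a new image then amounts to starting from pure noise and applying the learned de-noising step repeatedly.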

Most state-of-the-art image-generation systems use a diffusion model, though they differ in how they go about “de-noising” or reversing distortions. Stable Diffusion (from Stability AI) and Imagen, both released in 2022, used variations of an architecture called a convolutional neural network (CNN), which is good at analysing grid-like data such as rows and columns of pixels. CNNs, in effect, move small sliding windows up and down across their input looking for specific artefacts, such as patterns and corners. But though CNNs work well with pixels, some of the latest image-generators use so-called diffusion transformers, including Stability AI’s newest model, Stable Diffusion 3. Once trained on diffusion, transformers are much better able to grasp how various pieces of an image or frame of video relate to each other, and how strongly or weakly they do so, resulting in more realistic outputs (though they still make mistakes).
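The sliding-window idea behind CNNs can be shown with a toy example in Python with NumPy. This is a sketch, not a real CNN: the filter is written by hand to respond to vertical edges, whereas a trained network learns its filters from data, and the six-by-six "image" and the function name `slide_filter` are made up for illustration.

```python
import numpy as np

def slide_filter(image, kernel):
    """Slide a small window over the image, scoring each patch against the kernel."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            window = image[i:i + kh, j:j + kw]
            # A high score means the patch looks like the pattern in the kernel.
            out[i, j] = np.sum(window * kernel)
    return out

# Toy 6x6 image: dark left half, bright right half.
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-written vertical-edge detector (a trained CNN would learn such filters).
kernel = np.array([[-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0]])

response = slide_filter(image, kernel)
print(response)  # each row is [0, 3, 3, 0]: the filter fires only near the edge
```

The response is zero over the flat regions and peaks where the window straddles the boundary between dark and bright, which is the sense in which the filter "looks for" a pattern.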

Recommendation systems are another kettle of fish. It is rare to get a glimpse at the innards of one, because the companies that build and use recommendation algorithms are highly secretive about them. But in 2019 Meta, then Facebook, released details about its deep-learning recommendation model (DLRM). The model has three main parts. First, it converts inputs (such as a user’s age or “likes” on the platform, or content they consumed) into “embeddings”. It learns in such a way that similar things (like tennis and ping pong) are close to each other in this embedding space.

The DLRM then uses a neural network to do something called matrix factorisation. Imagine a spreadsheet where the columns are videos and the rows are different users. Each cell says how much each user likes each video. But most of the cells in the grid are empty. The goal of recommendation is to make predictions for all the empty cells. One way a DLRM might do this is to split the grid (in mathematical terms, factorise the matrix) into two grids: one that contains data about users, and one that contains data about the videos. By recombining these grids (or multiplying the matrices) and feeding the results into another neural network for more number-crunching, it is possible to fill in the grid cells that used to be empty—ie, predict how much each user will like each video.
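The spreadsheet picture can be made concrete with a small sketch in Python with NumPy. It covers only the splitting and recombining step, using plain gradient descent; it is not Meta's DLRM, which also feeds the result into a further neural network. The ratings grid, the number of hidden features (`rank`) and the training settings are all hypothetical.

```python
import numpy as np

def factorise(ratings, rank=2, steps=5000, lr=0.01, reg=0.01, seed=0):
    """Split a partly empty ratings grid into a users grid and a videos grid."""
    rng = np.random.default_rng(seed)
    observed = ~np.isnan(ratings)              # True where a rating exists
    filled = np.where(observed, ratings, 0.0)  # placeholder zeros for empty cells
    n_users, n_videos = ratings.shape
    users = rng.normal(scale=0.1, size=(n_users, rank))
    videos = rng.normal(scale=0.1, size=(n_videos, rank))
    for _ in range(steps):
        # Errors are measured on observed cells only; empty cells contribute nothing.
        error = np.where(observed, filled - users @ videos.T, 0.0)
        users_step = error @ videos - reg * users
        videos_step = error.T @ users - reg * videos
        users += lr * users_step
        videos += lr * videos_step
    return users, videos

nan = np.nan
# Hypothetical grid: rows are users, columns are videos, nan marks an empty cell.
ratings = np.array([
    [5.0, 4.0, nan, 1.0, nan],
    [4.0, nan, 5.0, nan, 1.0],
    [nan, 1.0, 2.0, 5.0, nan],
    [1.0, nan, nan, 4.0, 5.0],
])

users, videos = factorise(ratings)
predictions = users @ videos.T  # recombine the two grids: every cell is now filled

observed = ~np.isnan(ratings)
rmse = np.sqrt(np.mean((predictions[observed] - ratings[observed]) ** 2))
print(np.round(predictions, 1))
print(rmse)  # error on the cells that were already known
```

Each row of `users` and `videos` is a short list of learned numbers, in effect an embedding, and the predicted rating for any pair is just the dot product of the two.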

The same approach can be applied to advertisements, songs on a streaming service, products on an e-commerce platform, and so forth. Tech firms are most interested in models that excel at commercially useful tasks like this. But running these models at scale requires extremely deep pockets, vast quantities of data and huge amounts of processing power.

Wait until you see next year’s model

In academic contexts, where datasets are smaller and budgets are constrained, other kinds of models are more practical. These include recurrent neural networks (for analysing sequences of data), variational autoencoders (for spotting patterns in data), generative adversarial networks (where one model learns to do a task by repeatedly trying to fool another model) and graph neural networks (for predicting the outcomes of complex interactions).

Just as deep neural networks, transformers and diffusion models all made the leap from research curiosities to widespread deployment, features and principles from these other models will be seized upon and incorporated into future AI models. Transformers are highly efficient, but it is not clear that scaling them up can fix their tendency to hallucinate and to make logical errors when reasoning. The search is already under way for “post-transformer” architectures, from “state-space models” to “neuro-symbolic” AI, that can overcome such weaknesses and enable the next leap forward. Ideally such an architecture would combine attention with greater prowess at reasoning. As yet, no human knows how to build that kind of model. Maybe someday an AI model will do the job.

© 2024, The Economist Newspaper Limited. All rights reserved. From The Economist, published under licence. The original content can be found on www.economist.com