Marines on the ground, drones in the air and many other sensors were connected over a “mesh” network of advanced radios that allowed each to see, seamlessly, what was happening elsewhere, a set-up that had already allowed the marines to run circles around much larger forces in previous exercises. The data they collected were processed both on the “edge” of the network (aboard small, rugged computers strapped to commando vehicles with bungee cables) and on distant cloud servers, where they had been sent by satellite. Command-and-control software monitored a designated area, decided which drones should fly where, identified objects on the ground and suggested which weapon should strike which target.

The results were impressive. It was apparent that StormCloud was the “world’s most advanced kill chain”, says an officer involved in the experiment, referring to a web of sensors (like drones) and weapons (like missiles) knitted together with digital networks and software to make sense of the data flowing to and fro. Even two years ago, he says, it was “miles ahead”, in terms of speed and reliability, of human officers in a conventional headquarters.

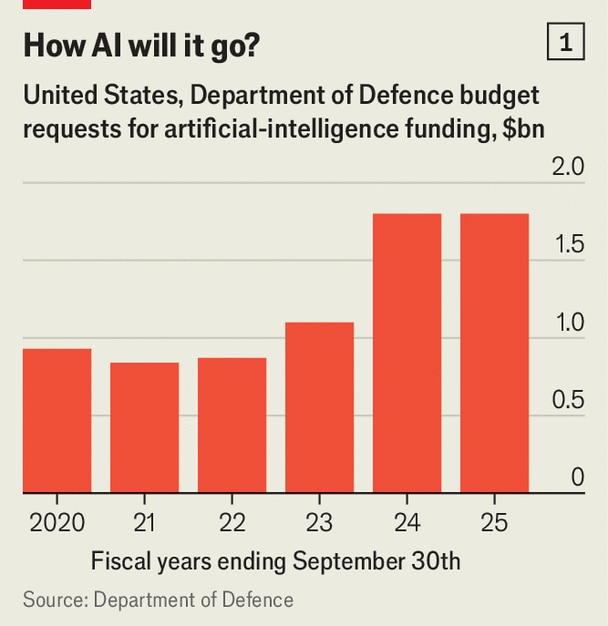

AI-enabled tools and weapons are not just being deployed in exercises. They are also in use on a growing scale in places like Gaza and Ukraine. Armed forces spy remarkable opportunities. They also fear being left behind by their adversaries. Spending is rising fast (see chart 1). But lawyers and ethicists worry that AI will make war faster, more opaque and less humane. The gap between the two groups is growing bigger, even as the prospect of a war between great powers looms larger.

There is no single definition of AI. Things that would once have merited the term, such as the terrain-matching navigation of Tomahawk missiles in the 1980s or the tank-spotting capabilities of Brimstone missiles in the early 2000s, are now seen as workaday software. And many cutting-edge capabilities described as AI do not involve the sort of “deep learning” and large language models underpinning services such as ChatGPT. But in various guises, AI is trickling into every aspect of war.

ProsAIc but gAInful

That begins with the boring stuff: maintenance, logistics, personnel and other tasks necessary to keep armies staffed, fed and fuelled. A recent study by the RAND Corporation, a think-tank, found that AI, by predicting when maintenance would be needed on A-10C warplanes, could save America’s air force $25m a month by avoiding breakdowns and overstocking of parts (although the AI did worse with parts that rarely failed). Logistics is another promising area. The US Army is using algorithms to predict when Ukrainian howitzers will need new barrels, for instance. AI is also starting to trickle into HR. The army is using a model trained on 140,000 personnel files to help score soldiers for promotion.

At the other extreme is the sharp end of things. Both Russia and Ukraine have been rushing to develop software to make drones capable of navigating to and homing in on a target autonomously, even if jamming disrupts the link between pilot and drone. Both sides typically use small chips for this purpose, which can cost as little as $100. Videos of drone strikes in Ukraine increasingly show “bounding boxes” appearing around objects, suggesting that the drone is identifying and locking on to a target. The technology remains immature, with the targeting algorithms confronting many of the same problems faced by self-driving cars, such as cluttered environments and obscured objects, and some unique to the battlefield, such as smoke and decoys. But it is improving fast.

Between AI at the back-end and AI inside munitions lies a vast realm of innovation, experimentation and technological advances. Drones, on their own, are merely disrupting, rather than transforming, war, argue Clint Hinote, a retired American air-force general, and Mick Ryan, a retired Australian general. But when combined with “digitised command and control systems” (think StormCloud) and “new-era meshed networks of civilian and military sensors” the result, they say, is a “transformative trinity” that allows soldiers on the front lines to see and act on real-time information that would once have been confined to a distant headquarters.

AI is a prerequisite for this. Start with the mesh of sensors. Imagine data from drones, satellites, social media and other sources sloshing around a military network. There is too much to process manually. Tamir Hayman, a general who led Israeli military intelligence until 2021, points to two big breakthroughs. The “fundamental leap”, he says, eight or nine years ago, was in speech-to-text software that enabled voice intercepts to be searched for keywords. The other was in computer vision. Project Spotter, in Britain’s defence ministry, is already using neural networks for the “automated detection and identification of objects” in satellite images, allowing places to be “automatically monitored 24/7 for changes in activity”. As of February, a private company had labelled 25,000 objects to train the model.

Tom Copinger-Symes, a British general, told the House of Lords last year that such systems were “still in the upper ends of research and development rather than in full-scale deployment”, though he pointed to the use of commercial tools to identify, for instance, clusters of civilians during Britain’s evacuation of its citizens from Sudan in early 2023. America seems further along. It began Project Maven in 2017 to deal with the deluge of photos and videos taken by drones in Afghanistan and Iraq.

Maven is “already producing large volumes of computer-vision detections for warfighter requirements”, noted the director of the National Geospatial-Intelligence Agency, which runs the project, in May. The stated aim is for Maven “to meet or exceed human detection, classification, and tracking performance”. It is not there yet—it struggles with tricky cases, such as partly hidden weapons. But The Economist’s tracker of war-related fires in Ukraine is based on machine-learning, entirely automated and operates at a scale that journalists could not match. It has already detected 93,000 probable war-related blazes.

AI can process more than phone calls or pictures. In March the Royal Navy announced that its mine-hunting unit had completed a year of experimentation in the Persian Gulf using a small self-driving boat, the Harrier, whose towed sonar system could search for mines on the seabed and alert other ships or units on land. And Michael Horowitz, a Pentagon official, recently told Defense News, a website, that America, Australia and Britain, as part of their AUKUS pact, had developed a “trilateral algorithm” that could be used to process the acoustic data collected by sonobuoys dropped from each country’s submarine-hunting P-8 aircraft.

In most of these cases, AI is identifying a signal amid the noise or an object amid some clutter: Is that a truck or a tank? An anchor or a mine? A trawler or a submarine? Identifying human combatants is perhaps more complicated and certainly more contentious. In April +972 Magazine, an Israeli outlet, claimed that the Israel Defence Forces (IDF) were using an AI tool known as Lavender to identify thousands of Palestinians as targets, with human operators giving only cursory scrutiny to the system’s output before ordering strikes. The IDF retorted that Lavender was “simply a database whose purpose is to cross-reference intelligence sources”.

In practice, Lavender is likely to be what experts call a decision-support system (DSS), a tool to fuse different data such as phone records, satellite images and other intelligence. America’s use of computer systems to process acoustic and smell data from sensors in Vietnam might count as a primitive DSS. So too, notes Rupert Barrett-Taylor of the Alan Turing Institute in London, would the software used by American spies and special forces in the war on terror, which turned phone records and other data into huge spidery charts that visualised the connections between people and places, with the aim of identifying insurgents or terrorists.

ExplAIn or ordAIn?

What is different is that today’s software benefits from greater computing power, whizzier algorithms (the breakthroughs in neural networks occurred only in 2012) and more data, owing to the proliferation of sensors. The result is not just more or better intelligence. It is a blurring of the line between intelligence, surveillance and reconnaissance (ISR) and command and control (C2)—between making sense of data and acting on it.

Consider Ukraine’s GIS Arta software, which collates data on Russian targets, typically for artillery batteries. It can already generate lists of potential targets “according to commander priorities”, write Generals Hinote and Ryan. One of the reasons that Russian targeting in Ukraine has improved in recent months, say officials, is that Russia’s C2 systems are getting better at processing information from drones and sending it to guns. “By some estimates,” writes Arthur Holland Michel in a paper for the International Committee of the Red Cross (ICRC), a humanitarian organisation, “a target search, recognition and analysis activity that previously took hours could be reduced…to minutes.”

The US Air Force recently asked the RAND Corporation to assess whether AI tools could provide options to a “space warfighter” dealing with an incoming threat to a satellite. The conclusion was that AI could indeed recommend “high-quality” responses. Similarly, DARPA, the Pentagon’s blue-sky research arm, is working on a programme named, with tongue firmly in cheek, the Strategic Chaos Engine for Planning, Tactics, Experimentation and Resiliency (SCEPTER), to produce recommended actions for commanders during “military engagements at high machine speeds”. In essence, it can generate novel war plans on the fly.

“A lot of the methods that are being employed” in SCEPTER and similar DARPA projects “didn’t even exist two to five years ago”, says Eric Davis, a programme manager at the agency. He points to the example of “Koopman operator theory”, an old and obscure mathematical framework that can be used to analyse complex and non-linear systems—like those encountered in war—in terms of simpler linear algebra. Recent breakthroughs in applying it have made a number of AI problems more tractable.
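
The idea behind Koopman operator theory can be seen in a toy example. The sketch below is purely illustrative and has nothing to do with SCEPTER or any DARPA code: the two-variable system, the constants `A` and `B` and the function names are all assumptions, chosen because this particular system happens to become exactly linear once a third coordinate, the square of the first variable, is tracked alongside the original two. Real systems rarely lift so neatly and usually need approximations.

```python
# Toy illustration of a Koopman-style "lifting" (hypothetical system, not
# taken from the article). A non-linear update on (x1, x2) becomes a plain
# matrix multiplication once x1**2 is added as an extra coordinate.

A, B = 0.9, 0.5  # assumed illustrative constants


def nonlinear_step(x1, x2):
    """Advance the original system one step; note the non-linear x1**2 term."""
    return A * x1, B * x2 + (A**2 - B) * x1**2


# In the lifted coordinates y = (x1, x2, x1**2) the same dynamics are linear:
#   y1' = A * y1
#   y2' = B * y2 + (A**2 - B) * y3
#   y3' = A**2 * y3      (because (A * x1)**2 == A**2 * x1**2)
K = [
    [A,   0.0, 0.0],
    [0.0, B,   A**2 - B],
    [0.0, 0.0, A**2],
]


def linear_step(y):
    """Advance the lifted state one step using only linear algebra."""
    return [sum(K[i][j] * y[j] for j in range(3)) for i in range(3)]


def simulate(x1, x2, steps):
    """Run both versions side by side and return their final states."""
    nonlinear = (x1, x2)
    lifted = [x1, x2, x1**2]
    for _ in range(steps):
        nonlinear = nonlinear_step(*nonlinear)
        lifted = linear_step(lifted)
    return nonlinear, lifted


if __name__ == "__main__":
    final_nonlinear, final_lifted = simulate(1.0, 2.0, steps=10)
    print("non-linear model:", final_nonlinear)
    print("lifted linear model (first two entries):", final_lifted[:2])
```

The first two entries of the lifted state track the non-linear model up to floating-point rounding, which is the appeal: once a problem is in linear form, standard tools of linear algebra (eigenvalues, matrix powers) can be brought to bear on it.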

PrAIse and complAInts

The result of all this is a growing intellectual chasm between those whose job it is to wage war and those who seek to tame it. Legal experts and ethicists argue that the growing role of AI in war is fraught with danger. “The systems we have now cannot recognise hostile intent,” argues Noam Lubell of the University of Essex. “They cannot tell the difference between a short soldier with a real gun and a child with a toy gun…or between a wounded soldier lying slumped over a rifle and a sniper ready to shoot with a sniper rifle.” Such algorithms “cannot be used lawfully”, he concludes. Neural networks can also be fooled too easily, says Stuart Russell, a computer scientist: “You could then take perfectly innocent objects, like lampposts, and print patterns on them that would convince the weapon that this is a tank.”

Advocates of military AI retort that the sceptics have an overly rosy view of war. A strike drone hunting for a particular object might not be able to recognise, let alone respect, an effort at surrender, acknowledges a former British officer involved in policy on AI. But if the alternative is intense shellfire, “There is no surrendering in that circumstance anyway.” Keith Dear, a former officer in the Royal Air Force who now works for Fujitsu, a Japanese firm, goes further. “If…machines produce a lower false positive and false negative rate than humans, particularly under pressure, it would be unethical not to delegate authority,” he argues. “We did various kinds of tests where we compared the capabilities and the achievements of the machine and compared to that of the human,” says the IDF’s General Hayman. “Most tests reveal that the machine is far, far, far more accurate…in most cases it’s no comparison.”

One fallacy involves extrapolating from the anti-terror campaigns of the 2000s. “The future’s not about facial recognition-ing a guy and shooting him from 10,000 feet,” argues Palmer Luckey, the founder of Anduril, one of the firms involved in StormCloud. “It’s about trying to shoot down a fleet of amphibious landing craft in the Taiwan Strait.” If an object has the visual, electronic and thermal signature of a missile launcher, he argues, “You just can’t be wrong…it’s so incredibly unique.” Pre-war modelling further reduces uncertainty: “99% of what you see happening in the China conflict will have been run in a simulation multiple times,” Mr Luckey says, “long before it ever happens.”

“The problem is when the machine does make mistakes, those are horrible mistakes,” says General Hayman. “If accepted, they would lead to traumatic events.” He therefore opposes taking the human “out of the loop” and automating strikes. “It is really tempting,” he acknowledges. “You will accelerate the procedure in an unprecedented manner. But you can breach international law.” Mr Luckey concedes that AI will be least relevant in the “dirty, messy, awful” job of Gaza-style urban warfare. “If people imagine there’s going to be Terminator robots looking for the right Muhammad and shooting him… that’s not how it’s going to work out.”

For its part, the ICRC warns that AI systems are potentially unpredictable, opaque and subject to bias, but accepts they “can facilitate faster and broader collection and analysis of available information…minimising risks for civilians”. Much depends on how the tools are used. If the IDF employed Lavender as reported, it suggests the problem was over-expansive rules of engagement and lax operators, rather than any pathology of the software itself.

For many years experts and diplomats have been wrangling at the United Nations over whether to restrict or ban autonomous weapon systems (AWS). But even defining them is difficult. The ICRC says AWS are those which choose a target based on a general profile—any tank, say, rather than a specific tank. That would include many of the drones being used in Ukraine. The ICRC favours a ban on AWS which target people or behave unpredictably. Britain retorts that “fully” autonomous weapons are those which identify, select and attack targets without “context-appropriate human involvement”, a much higher bar. The Pentagon takes a similar view, emphasising “appropriate levels of human judgment”.

Defining that, in turn, is fiendishly hard. And it is not just to do with the lethal act, but what comes before it. A highly autonomous attack drone may seem to lack human control. But if its behaviour is well understood and it is used in an area where there are known to be legitimate military targets and no civilians, it might pose few problems. Conversely, a tool which merely suggests targets may appear more benign. But commanders who manually approve individual targets suggested by the tool “without cognitive clarity or awareness”, as Article 36, an advocacy group, puts it—mindlessly pushing the red button, in other words—have abdicated moral responsibility to a machine.

The quandary is likely to worsen for two reasons. One is that AI begets AI. If one army is using AI to locate and hit targets more rapidly, the other side may be forced to turn to AI to keep up. That is already the case when it comes to air-defence, where advanced software has been essential for tracking approaching threats since the dawn of the computer age. The other reason is that it will become harder for human users to grasp the behaviour and limitations of military systems. Modern machine learning is not yet widely used in “critical” decision-support systems, notes Mr Holland Michel. But it will be. And those systems will undertake “less mathematically definable tasks”, he notes, such as predicting the future intent of an adversary or even his or her emotional state.

There is even talk of using AI in nuclear decision-making. The idea is that countries could not only fuse data to keep track of incoming threats (as has happened since the 1950s) but also retaliate automatically if the political leadership is killed in a first strike. The Soviet Union worked on this sort of “dead hand” concept during the cold war as part of its “Perimetr” system. It remains in use and is now rumoured to be reliant on AI-driven software, notes Leonid Ryabikhin, a former Soviet air-force officer and arms-control expert. In 2023 a group of American senators even introduced a new bill: the “Block Nuclear Launch by Autonomous Artificial Intelligence Act”. This is naturally a secretive area and little is known about how far different countries want to go. But the issue is important enough to have been high up the agenda for presidential talks last year between Joe Biden and Xi Jinping.

RemAIning in the loop

For the moment, in conventional wars, “there’s just about always time for somebody to say yes or no,” says a British officer. “There’s no automation of the whole kill chain needed or being pushed.” Whether that would be true in a high-intensity war with Russia or China is less clear. In “The Human Machine Team”, a book published under a pseudonym in 2021, Brigadier-General Yossi Sariel, the head of an elite Israeli military-intelligence unit, wrote that an AI-enabled “human-machine team” could generate “thousands of new targets every day” in a war. “There is a human bottleneck,” he argued, “for both locating the new targets and decision-making to approve the targets.”

In practice, all these debates are being superseded by events. Neither Russia nor Ukraine pays much heed to whether a drone is an “autonomous” weapon system or merely an “automated” one. Their priority is to build weapons that can evade jamming and destroy as much enemy armour as possible. False positives are not a big concern for a Russian army that has bombed more than 1,000 Ukrainian health facilities to date, nor for a Ukrainian army that is fighting for its survival.

Hanging over this debate is also the spectre of a war involving great powers. NATO countries know they might have to contend with a Russian army that might, once this war ends, have extensive experience of building AI weapons and testing them on the battlefield. China, too, is pursuing many of the same technologies as America. Chinese firms make the vast majority of drones sold in America, be it as consumer goods or for industrial purposes. The Pentagon’s annual report on Chinese military power observes that in 2022 the People’s Liberation Army (PLA) began discussing “MultiDomain Precision Warfare”: the use of “big data and artificial intelligence to rapidly identify key vulnerabilities” in American military systems, such as satellites or computer networks, which could then be attacked.

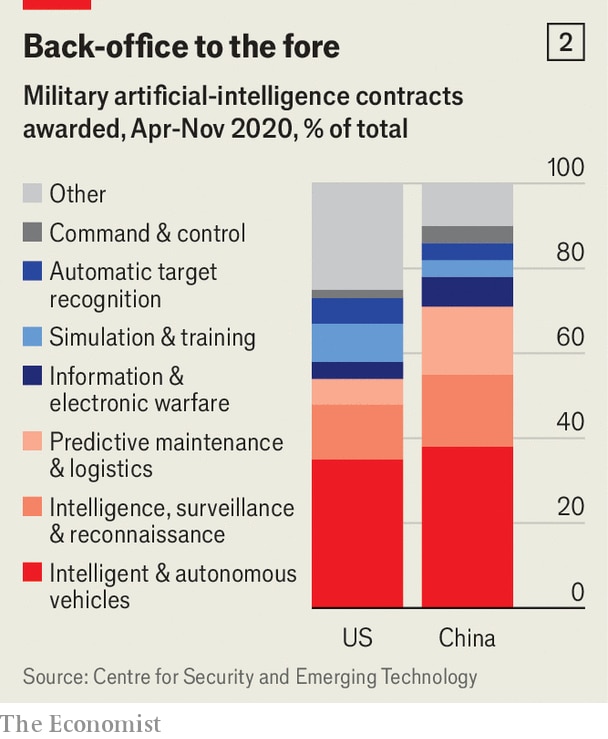

The question is who has the upper hand. American officials once fretted that China’s lax privacy rules and control over the private sector would give the PLA access to more and better data, which would result in superior algorithms and weapons. Those concerns have mellowed. A recent study of procurement data by the Centre for Security and Emerging Technology (CSET) at Georgetown University found that America and China are “devoting comparable levels of attention to a similar suite of AI applications” (see chart 2).

Moreover, America has pulled ahead in cutting-edge models, thanks in part to its chip restrictions. In 2023 it produced 61 notable machine-learning models and Europe 25, according to Epoch AI, a data firm. China produced 15. These are not the models in current military systems, but they will inform future ones. “China faces significant headwinds in…military AI,” argues Sam Bresnick of CSET. It is unclear whether the PLA has the tech talent to create world-class systems, he points out, and its centralised decision-making might impede AI decision-support. Many Chinese experts are also worried about “untrustworthy” AI. “The PLA possesses plenty of lethal military power,” notes Jacob Stokes of CNAS, another think-tank, “but right now none of it appears to have meaningful levels of autonomy enabled by AI”.

China’s apparent sluggishness is part of a broader pattern. Some, like Kenneth Payne of King’s College London, think AI will transform not just the conduct of war, but its essential nature. “This fused machine-human intelligence would herald a genuinely new era of decision-making in war,” he predicts. “Perhaps the most revolutionary change since the discovery of writing, several thousand years ago.” But even as such claims grow more plausible, the transformation remains stubbornly distant in many respects.

“The irony here is that we talk as if AI is everywhere in defence, when it is almost nowhere,” notes Sir Chris Deverell, a retired British general. “The penetration of AI in the UK Ministry of Defence is almost zero…There is a lot of innovation theatre.” A senior Pentagon official says that the department has made serious progress in improving its data infrastructure—the pipes along which data move—and in unmanned aircraft that work alongside warplanes with crews. Even so, the Pentagon spends less than 1% of its budget on software—a statistic frequently trotted out by executives at defence-tech startups. “What is unique to the [Pentagon] is that our mission involves the use of force, so the stakes are high,” says the official. “We have to adopt AI both quickly and safely.”

Meanwhile, Britain’s StormCloud is getting “better and better”, says an officer involved in its development, but the project has moved slowly because of internal politics and red tape around the accreditation of new technology. Funding for its second iteration was a paltry £10m, pocket money in the world of defence. The plan is to use it on several exercises this year. “If we were Ukraine or genuinely worried about going to war any time soon,” the officer says, “we’d have spent £100m-plus and had it deployed in weeks or months.”

© 2024, The Economist Newspaper Limited. All rights reserved. From The Economist, published under licence. The original content can be found on www.economist.com