It is a question ever more readers of scientific papers are asking. Large language models (LLMs) are now more than good enough to help write a scientific paper. They can breathe life into dense scientific prose and speed up the drafting process, especially for non-native English speakers. Such use also comes with risks: LLMs are particularly susceptible to reproducing biases, for example, and can churn out vast amounts of plausible nonsense. Just how widespread an issue this is, though, has been unclear.

In a preprint posted recently on arXiv, researchers based at the University of Tübingen in Germany and Northwestern University in America provide some clarity. Their research, which has not yet been peer-reviewed, suggests that at least one in ten new scientific papers contains material produced by an LLM. That means over 100,000 such papers will be published this year alone. And that is a lower bound. In some fields, such as computer science, over 20% of research abstracts are estimated to contain LLM-generated text. Among papers from Chinese computer scientists, the figure is one in three.

Spotting LLM-generated text is not easy. Researchers have typically relied on one of two methods: detection algorithms trained to identify the tell-tale rhythms of human prose, and a more straightforward hunt for suspicious words disproportionately favoured by LLMs, such as “pivotal” or “realm”. Both approaches rely on “ground truth” data: one pile of texts written by humans and one written by machines. These are surprisingly hard to collect: both human- and machine-generated text change over time, as languages evolve and models update. Moreover, researchers typically collect LLM text by prompting these models themselves, and the way they do so may be different from how scientists behave.

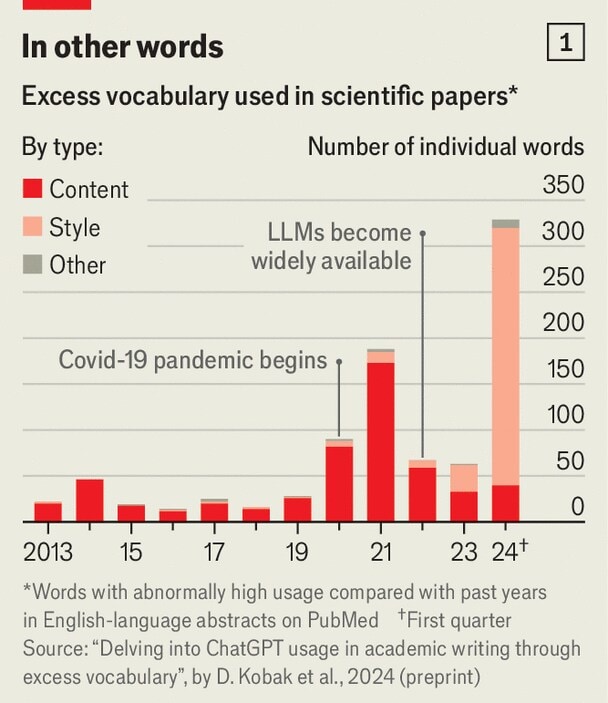

The latest research, by Dmitry Kobak of the University of Tübingen and his colleagues, shows a third way, bypassing the need for ground-truth data altogether. The team’s method is inspired by demographic work on excess deaths, which allows mortality associated with an event to be ascertained by looking at differences between expected and observed death counts. Just as the excess-deaths method looks for abnormal death rates, their excess-vocabulary method looks for abnormal word use. Specifically, the researchers looked for words that appeared in scientific abstracts significantly more often than their frequency in the existing literature would predict (see chart 1). The corpus they chose to analyse consisted of the abstracts of virtually all English-language papers available on PubMed, a search engine for biomedical research, published between January 2010 and March 2024, some 14.2m in all.
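
The comparison of expected and observed word use can be illustrated with a minimal sketch. It assumes that a word's expected frequency is projected in a straight line from two earlier baseline years; that projection rule, the function names and the frequencies below are illustrative assumptions, not details taken from the preprint.

```python
def expected_frequency(freq_by_year, baseline_years, target_year):
    """Project a word's frequency to target_year by straight-line
    extrapolation from two baseline years (an assumed rule)."""
    y0, y1 = baseline_years
    slope = (freq_by_year[y1] - freq_by_year[y0]) / (y1 - y0)
    projected = freq_by_year[y1] + slope * (target_year - y1)
    return max(projected, 0.0)  # a frequency cannot be negative


def excess_usage(freq_by_year, baseline_years, target_year):
    """Return (gap, ratio) between observed and expected frequency."""
    expected = expected_frequency(freq_by_year, baseline_years, target_year)
    observed = freq_by_year[target_year]
    gap = observed - expected
    ratio = observed / expected if expected > 0 else float("inf")
    return gap, ratio


# Invented numbers: the share of abstracts containing the word in each year.
delves = {2021: 0.0002, 2022: 0.0003, 2024: 0.0050}

gap, ratio = excess_usage(delves, baseline_years=(2021, 2022), target_year=2024)
print(f"excess gap: {gap:.4f}, excess ratio: {ratio:.1f}")
```

With these made-up figures the word is projected to appear in 0.05% of abstracts but actually appears in 0.5%, so it would stand out as excess vocabulary on either measure.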

The researchers found that in most years, word usage was relatively stable: in no year from 2013 to 2019 did a word increase in frequency beyond expectation by more than 1%. That changed in 2020, when “SARS”, “coronavirus”, “pandemic”, “disease”, “patients” and “severe” all exploded. (Covid-related words continued to show abnormally high usage until 2022.)

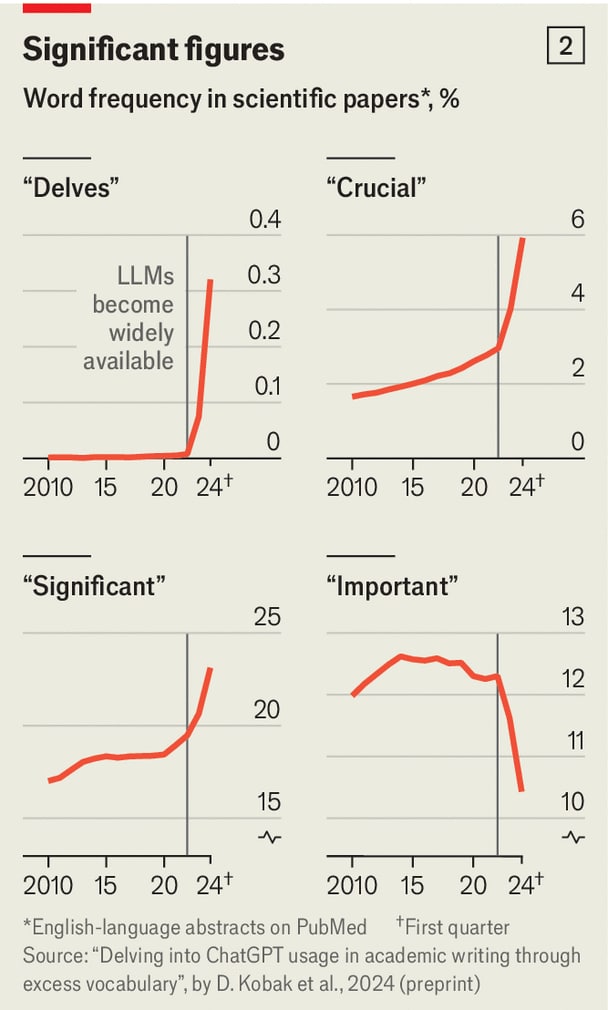

By early 2024, about a year after LLMs like ChatGPT had become widely available, a different set of words took off. Of the 774 words whose use increased significantly between 2013 and 2024, 329 took off in the first three months of 2024. Fully 280 of these were related to style, rather than subject matter. Notable examples include: “delves”, “potential”, “intricate”, “meticulously”, “crucial”, “significant”, and “insights” (see chart 2).

The most likely reason for such increases, say the researchers, is help from LLMs. When they estimated the share of abstracts which used at least one of the excess words (omitting words which are widely used anyway), they found that at least 10% probably had LLM input. As PubMed indexes about 1.5m papers annually, that would mean that more than 150,000 papers per year are currently written with LLM assistance.
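
The estimate above can be sketched roughly as follows. The sketch assumes the lower bound is the share of abstracts containing at least one marker word, minus the share that would have been expected anyway. The marker list, the toy abstracts and the function names are invented for illustration; only the 10% and 1.5m figures come from the article.

```python
import re


def share_with_any(abstracts, marker_words):
    """Fraction of abstracts that contain at least one marker word."""
    markers = {w.lower() for w in marker_words}
    hits = 0
    for text in abstracts:
        tokens = set(re.findall(r"[a-z]+", text.lower()))
        if tokens & markers:
            hits += 1
    return hits / len(abstracts)


def lower_bound_share(observed_share, expected_share):
    """Excess share of abstracts, floored at zero."""
    return max(observed_share - expected_share, 0.0)


# Illustrative marker list and invented toy abstracts.
MARKERS = ["delves", "meticulously", "intricate"]
before = [
    "We measure enzyme activity in mice.",
    "An intricate pathway regulates growth.",
    "Results show reduced mortality.",
    "We report a cohort study of adults.",
]
after = [
    "This study delves into enzyme activity.",
    "We meticulously analysed the cohort.",
    "Results show reduced mortality.",
    "An intricate interplay shapes growth.",
]

excess = lower_bound_share(share_with_any(after, MARKERS),
                           share_with_any(before, MARKERS))
print(f"toy lower bound: {excess:.0%}")

# The article's own arithmetic: 10% of about 1.5m papers a year.
papers_per_year = round(0.10 * 1_500_000)
print(f"papers per year at a 10% share: {papers_per_year:,}")
```

Because an abstract can be machine-assisted without using any marker word, a count of this kind can only understate the true share, which is why the figure is a lower bound.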

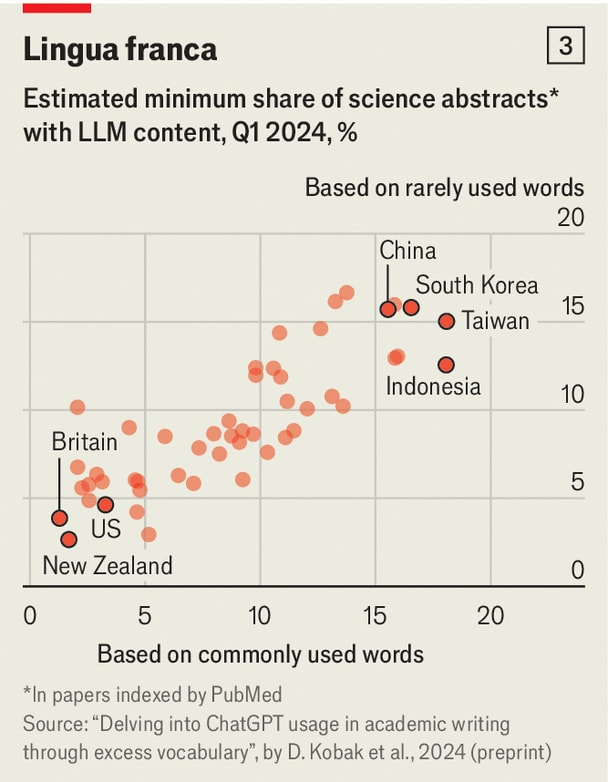

This seems to be more widespread in some fields than others. The researchers found that computer science had the most use, at over 20%, whereas ecology had the least, with a lower bound below 5%. There was also variation by geography: scientists from Taiwan, South Korea, Indonesia and China were the most frequent users, and those from Britain and New Zealand used LLMs least (see chart 3). (Researchers from other English-speaking countries also deployed LLMs infrequently.) Different journals also yielded different results. Those in the Nature family, as well as other prestigious publications like Science and Cell, appear to have a low LLM-assistance rate (below 10%), while Sensors (a journal about, unimaginatively, sensors) exceeded 24%.

The excess-vocabulary method’s results are roughly consistent with those from older detection algorithms, which looked at smaller samples from more limited sources. For instance, in a preprint released in April 2024, a team at Stanford found that 17.5% of sentences in computer-science abstracts were likely to be LLM-generated. They also found a lower prevalence in Nature publications and mathematics papers (LLMs are terrible at maths). The excess vocabulary identified also fits with existing lists of suspicious words.

Such results should not be overly surprising. Researchers routinely acknowledge the use of LLMs to write papers. In one survey of 1,600 researchers conducted in September 2023, over 25% told Nature they used LLMs to write manuscripts. The largest benefit identified by the interviewees, many of whom studied or used AI in their own work, was to help with editing and translation for those who did not have English as their first language. Faster and easier coding came joint second, together with the simplification of administrative tasks; summarising or trawling the scientific literature; and, tellingly, speeding up the writing of research manuscripts.

For all these benefits, using LLMs to write manuscripts is not without risks. Scientific papers rely on the precise communication of uncertainty, for example, which is an area where the capabilities of LLMs remain murky. Hallucination—whereby LLMs confidently assert fantasies—remains common, as does a tendency to regurgitate other people’s words, verbatim and without attribution.

Studies also indicate that LLMs preferentially cite other papers that are highly cited in a field, potentially reinforcing existing biases and limiting creativity. As algorithms, they also cannot be listed as authors on a paper or held accountable for the errors they introduce. Perhaps most worrying, the speed at which LLMs can churn out prose risks flooding the scientific world with low-quality publications.

Academic policies on LLM use are in flux. Some journals ban it outright. Others have changed their minds. Up until November 2023, Science labelled all LLM text as plagiarism, saying: “Ultimately the product must come from—and be expressed by—the wonderful computers in our heads.” It has since amended its policy: LLM text is now permitted if detailed notes on how the models were used are provided in the methods section of papers, as well as in accompanying cover letters. Nature and Cell also allow the use of LLMs, as long as it is acknowledged clearly.

How enforceable such policies will be is not clear. For now, no reliable method exists to flush out LLM prose. Even the excess-vocabulary method, though useful at spotting large-scale trends, cannot tell if a specific abstract had LLM input. And researchers need only avoid certain words to evade detection altogether. As the new preprint puts it, these are challenges that must be meticulously delved into.

© 2024, The Economist Newspaper Limited. All rights reserved. From The Economist, published under licence. The original content can be found on www.economist.com